Astha Anand

An Expert Answering System for Pest Control Decisions

Astha Anand explains how she developed and evaluated an expert question answering system in the domain of agricultural pest control for her Master's thesis.

Background

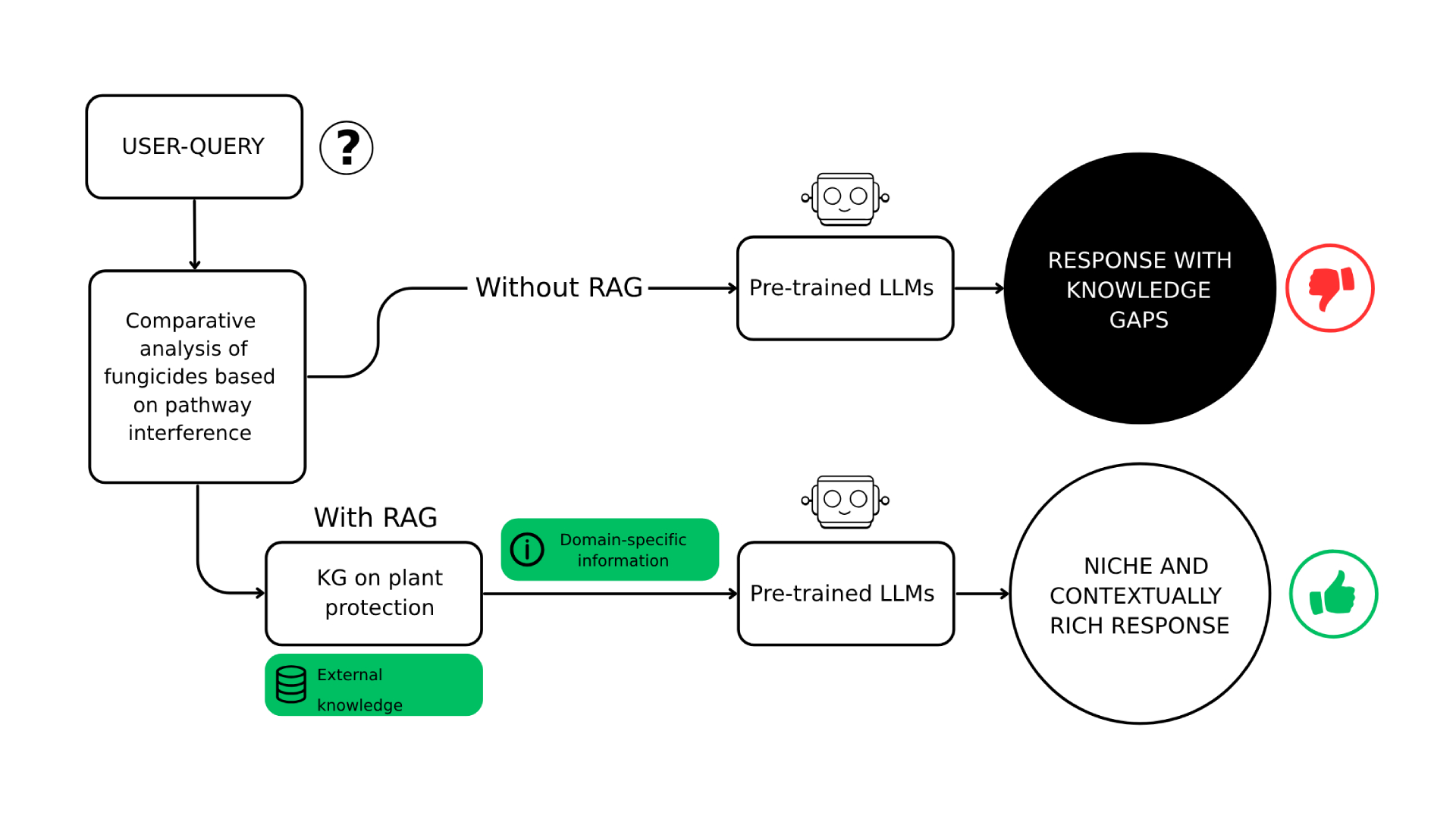

Plant protection is of paramount importance because it directly influences agricultural productivity, food quality, and economic stability. This work was inspired by a real case of cedar apple rust [4], a fungal disease of apples. Farmers previously controlled it with Asomate (an organo-arsenic fungicide [3]), which is now banned because of its toxicity. To fill this gap, we propose Retrieval-Augmented Generation (RAG) based expert Question Answering (QA) systems that offer quick pest control advice using plant protection knowledge graphs (KGs) and AI. As the name suggests, RAG consists of retrieval, augmentation, and generation [2, 1]. Retrieval extracts context-specific information from open or closed knowledge sources [1]. Augmentation integrates the retrieved information into the generator to refine the final output [1]. Generation produces a detail-rich response from the retrieved entities, using either white-box generators (parameter-accessible, like T5) or black-box generators (parameter-inaccessible, like GPT models) [1].

Methods

Knowledge Graph Construction

To build a pest control expert QA system, a domain-specific KG was constructed to capture interactions such as chemical-disease management, vector transmission, and plant immune responses. Chemical data was manually curated from PubChem, ChEBI (Chemical Entities of Biological Interest), and PPDB (Pesticides Properties DataBase). Molecules involved in pathogen recognition and immunity in Malus domestica were sourced from Plant Reactome. Information on cedar apple rust, including hosts, causative agents, management and induced mechanisms, was collected from expert-recommended articles and NCBI (National Center for Biotechnology Information).
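As a rough illustration of how such curated facts might be held in memory, the sketch below stores KG entries as subject-relation-object triples. It is not the thesis schema: the `Triple` type, the relation names, and the helper functions are hypothetical, and the three entries only restate relationships mentioned in this article.

```python
from collections import defaultdict
from typing import NamedTuple


class Triple(NamedTuple):
    """One KG edge: subject --relation--> obj."""
    subject: str
    relation: str
    obj: str


# Illustrative entries only; relation names are assumptions, not the real schema.
TRIPLES = [
    Triple("Asomate", "IS_A", "fungicide"),
    Triple("Asomate", "FORMERLY_USED_AGAINST", "cedar apple rust"),
    Triple("cedar apple rust", "AFFECTS", "Malus domestica"),
]


def build_index(triples):
    """Group outgoing edges by subject so neighbours can be looked up quickly."""
    index = defaultdict(list)
    for t in triples:
        index[t.subject].append((t.relation, t.obj))
    return dict(index)


def to_text(triple):
    """Render a triple as a short sentence, e.g. for later text embedding."""
    relation_words = triple.relation.lower().replace("_", " ")
    return f"{triple.subject} {relation_words} {triple.obj}"
```

Rendering each triple as text is one simple way to prepare KG entries for the embedding-based retrieval described later; a graph database would serve the Cypher-based system instead.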

Designing the QA System

A diverse set of test questions was written to evaluate the QA system’s coverage and accuracy. The questions were worded for clarity and were used to refine the pipeline iteratively.

Development of Expert Answering Systems for Pest Control Decisions

1. RAG pipeline for development of the Embedding Similarity Based Expert Answering System (ESBEAS)

The process begins when a user submits a natural language question. To retrieve relevant context, text embeddings are generated for both the query and the KG entries using an embedding model such as OpenAI’s text-embedding-ada-002. Cosine similarity is computed between the query embedding and each KG entry embedding to measure relevance. The top-matching KG entries are selected as context for augmentation. These are then passed to GPT-4 Turbo, which generates a detailed, domain-specific response; role prompting and temperature control are used to keep the response precise. For implementation, see https://github.com/SCAI-BIO/SEEDS.
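The retrieval step can be sketched in a few lines. This is a minimal illustration, not the code from the SEEDS repository: the function names are hypothetical, and the three-dimensional vectors are made-up stand-ins for real embeddings, which would come from an embedding model and have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction; treat it as unrelated
    return dot / (norm_a * norm_b)


def top_k_entries(query_vec, entry_vecs, k=2):
    """Return the k entry texts whose embeddings are most similar to the query."""
    scored = [
        (cosine_similarity(query_vec, vec), text)
        for text, vec in entry_vecs.items()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


# Toy vectors standing in for real embeddings (assumption for illustration).
TOY_EMBEDDINGS = {
    "Asomate is a fungicide": [0.9, 0.1, 0.0],
    "cedar apple rust affects Malus domestica": [0.7, 0.6, 0.1],
    "unrelated entry": [0.0, 0.1, 0.9],
}
QUERY_VEC = [1.0, 0.2, 0.0]

context = top_k_entries(QUERY_VEC, TOY_EMBEDDINGS, k=2)
```

The selected `context` entries would then be placed in the prompt sent to the generator. For a large KG, a vector index would usually replace the linear scan shown here.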

2. RAG pipeline for development of the Cypher Generating Expert System (CGES)

The process begins when a user submits a natural language question. This question is merged with a detailed prompt template that includes the KG schema, relevant examples, and specific instructions, which together guide the language model (LLM) to generate an accurate Cypher query. The LLM converts the question into a Cypher query, which is then executed against the plant protection KG to retrieve the relevant data. This data is fed back into the LLM as domain-specific context, and the model uses it to generate a clear and technically accurate natural language response. Finally, the user can approve or disapprove the answer; approved examples are stored and reused as few-shot examples to improve future responses.
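The prompt assembly and feedback loop could look roughly like the sketch below. Everything here is an assumption made for illustration: the template wording, the `FewShotStore` class, the schema string, and the example Cypher query are hypothetical and do not reproduce the thesis prompt or KG schema. The call to the LLM and the query execution are left out.

```python
PROMPT_TEMPLATE = """You translate questions about plant protection into Cypher.

Schema:
{schema}

Examples:
{examples}

Instructions: return a single read-only Cypher query and nothing else.

Question: {question}
Cypher:"""


class FewShotStore:
    """Keeps user-approved (question, Cypher) pairs for reuse in later prompts."""

    def __init__(self):
        self._examples = []

    def approve(self, question, cypher):
        """Record a pair that the user marked as correct."""
        self._examples.append((question, cypher))

    def render(self, limit=3):
        """Format the most recent approved pairs as few-shot examples."""
        recent = self._examples[-limit:]
        text = "\n".join(f"Q: {q}\nCypher: {c}" for q, c in recent)
        return text or "(none yet)"


def build_prompt(question, schema, store):
    """Merge the question, KG schema, and approved examples into one prompt."""
    return PROMPT_TEMPLATE.format(
        schema=schema, examples=store.render(), question=question
    )


# Hypothetical schema and query, for illustration only.
SCHEMA = "(:Chemical)-[:MANAGES]->(:Disease)"
store = FewShotStore()
store.approve(
    "Which chemicals manage cedar apple rust?",
    "MATCH (c:Chemical)-[:MANAGES]->(d:Disease {name: 'cedar apple rust'}) "
    "RETURN c.name",
)
prompt = build_prompt("Which diseases does Asomate manage?", SCHEMA, store)
```

Capping the number of rendered examples keeps the prompt short as approvals accumulate; selecting examples by similarity to the new question would be a natural refinement.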

Explainable AI for the Expert Answering Systems

ESBEAS: KG entries were clustered using MeSH terms and visualized via PCA/t-SNE. An interactive app lets users explore the top-ranked results, which makes the retrieval step more transparent.

CGES: A Dash interface displays the Cypher queries, their outputs, and the LLM prompts. Feedback-enabled iterative refinement and prompt-level transparency support explainability and help detect bias.

Results and Conclusion

Both systems, ESBEAS and CGES, answered the test questions effectively. ESBEAS ranked the relevant KG data well and generated accurate, informative responses. CGES constructed correct Cypher queries for all test questions and produced detailed answers from the retrieved KG data. A plant expert evaluated the outputs and found that 5 out of 7 answers from ESBEAS were more precise and accurate than those from CGES. The use of RAG in these systems helped reduce the hallucinations commonly seen in general-purpose QA systems. CGES is particularly valuable for plant experts who lack Cypher knowledge, because it lets them interact with knowledge graphs in natural language. Future work includes expanding the KG, adding visual QA via symptom images, and enhancing the user experience with caching, memory, and dual-format responses.

Citations

[1] Yujuan Ding, Wenqi Fan, Liangbo Ning, Shijie Wang, Hengyun Li, Dawei Yin, Tat-Seng Chua, and Qing Li. A Survey on RAG Meeting LLMs: Towards Retrieval-Augmented Large Language Models. arXiv preprint arXiv:2405.06211, 2024.

[2] Patrick Lewis, Ethan Perez, et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. Advances in Neural Information Processing Systems, 33:9459-9474, 2020.

[3] Asomate. In: Lewis K, Tzilivakis J, Green A, Warner D. Pesticide Properties DataBase (PPDB) University of Hertfordshire. 2006. URL: https://sitem.herts.ac.uk/aeru/ppdb/en/Reports/1510.htm

[4] Rebecca Koetter and Michelle Grabowski. Cedar-apple rust and related rust diseases. University of Minnesota Extension, 2024. URL: https://extension.umn.edu/plant-diseases/cedar-apple-rust