Software and Scientific Computing

The Software and Scientific Computing group develops algorithms and software tools that enable the quick discovery and exploration of knowledge in freely available structured and unstructured sources.

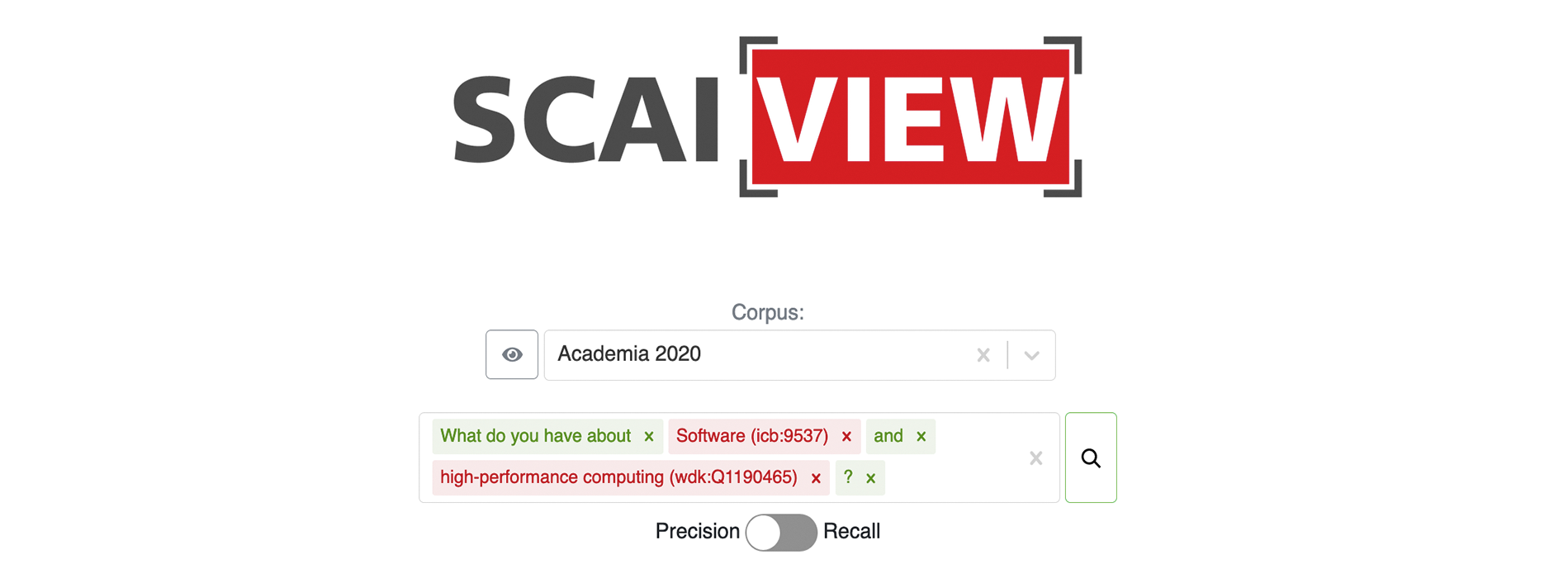

When reading scientific literature, searching databases, or browsing online media, we often wonder, "Could this be true?" or "What is the current state of knowledge?" When using web search portals, we have to sift through long lists of results. We conduct research on distributed information systems that aim to answer such questions ad hoc, going far beyond keyword-based search.

Our data center integrates both structured databases (covering, for example, proteins, chemicals, drugs, and clinical studies) and vast collections of unstructured documents (such as research articles, patents, and package inserts). The goal is to connect these different sources into highly complex knowledge graphs by automatically detecting and normalizing concepts and their relationships.

We use modern information extraction techniques to automatically identify mentions of concepts (including synonyms and abbreviations) and establish relationships among them (relation mining) using terminologies and ontologies. The collected knowledge is stored in federated graph databases or triple stores, allowing experts from various fields (such as biomedicine, pharmacy, chemistry, and biotechnology) to query it. We rely on modern big data architectures, Semantic Web technology, and state-of-the-art artificial intelligence methods (such as large language models, LLMs). We primarily develop and use open-source software solutions (such as Kubernetes, Apache Spark, Spring, and React) and leverage standardized interfaces (such as OpenAPI and OAuth) that we customize and extend (see https://github.com/SCAI-BIO).
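As a rough illustration of the idea, the following minimal Python sketch shows dictionary-based concept recognition, normalization of synonyms and abbreviations to a shared identifier, and the derivation of simple subject-predicate-object triples from sentence-level co-occurrence. It is not the group's actual pipeline: the terminology, the `EX:` identifiers, the function names, and the `co_occurs_with` predicate are all hypothetical, and real systems typically use curated ontologies and far more robust relation mining.

```python
import re
from itertools import combinations

# Hypothetical mini-terminology: surface form (lowercase) -> concept identifier.
# The "EX:" identifiers are illustrative placeholders, not real ontology IDs.
TERMINOLOGY = {
    "alzheimer's disease": "EX:0001",
    "alzheimer disease": "EX:0001",
    "ad": "EX:0001",            # abbreviation
    "amyloid beta": "EX:0002",
    "abeta": "EX:0002",         # synonym
    "donepezil": "EX:0003",
}


def find_mentions(sentence):
    """Return sorted (start, end, surface, concept_id) tuples for dictionary hits."""
    mentions = []
    # Try the longest surface forms first so multi-word terms take precedence.
    for surface in sorted(TERMINOLOGY, key=len, reverse=True):
        # Match whole tokens only (no word character directly before or after).
        pattern = r"(?<!\w)" + re.escape(surface) + r"(?!\w)"
        for m in re.finditer(pattern, sentence, flags=re.IGNORECASE):
            # Skip hits that overlap a mention that was already accepted.
            if any(m.start() < end and start < m.end()
                   for start, end, _, _ in mentions):
                continue
            mentions.append((m.start(), m.end(), m.group(0), TERMINOLOGY[surface]))
    return sorted(mentions)


def cooccurrence_triples(sentence):
    """Derive naive triples from concepts that co-occur in one sentence."""
    concept_ids = sorted({cid for _, _, _, cid in find_mentions(sentence)})
    return [(a, "co_occurs_with", b) for a, b in combinations(concept_ids, 2)]


if __name__ == "__main__":
    text = "Donepezil is used in AD, where Abeta accumulates."
    for triple in cooccurrence_triples(text):
        print(triple)
```

Because every synonym and abbreviation maps to the same identifier, mentions from different documents end up on the same node once the triples are loaded into a graph database or triple store. Case-insensitive matching of short abbreviations such as "AD" is ambiguous in practice and would need disambiguation in a real system.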

Our offerings are diverse. We:

- Provide consulting and training on information extraction, knowledge graph modeling, setting up and operating scalable microservice architectures for big data environments, digitization, and data preparation.

- Develop graphical analysis tools and application programming interfaces (APIs) and customize them according to customer requirements.

- Participate in national and international research projects.

- Collaborate with industry partners and undertake commissioned work.

- Offer and supervise exciting research projects (practical projects, bachelor's/master's theses).

We have:

- An excellent infrastructure (data center, computing center, fast connectivity).

- Expertise in chemistry, molecular biology, biomedicine, pharmacology, computer science, and mathematics.

- A broad spectrum of computational expertise.

Selected Publications

- Babaiha, Negin Sadat, et al. "A Natural Language Processing System for the Efficient Updating of Highly Curated Pathophysiology Mechanism Knowledge Graphs." Artificial Intelligence in the Life Sciences (2023): 100078.

- Sargsyan, Astghik, et al. "The Epilepsy Ontology: a community-based ontology tailored for semantic interoperability and text mining." Bioinformatics Advances 3.1 (2023): vbad033.

- Lage-Rupprecht, Vanessa, et al. "A hybrid approach unveils drug repurposing candidates targeting an Alzheimer pathophysiology mechanism." Patterns 3.3 (2022).

- Wegner, Philipp, et al. "Common data model for COVID-19 datasets." Bioinformatics 38.24 (2022): 5466-5468.

- Schultz, Bruce, et al. "Integration of adverse outcome pathways with knowledge graphs." International Congress of Toxicology (ICT), Maastricht, poster session (2022).